Make A Beat Drop On Garageband

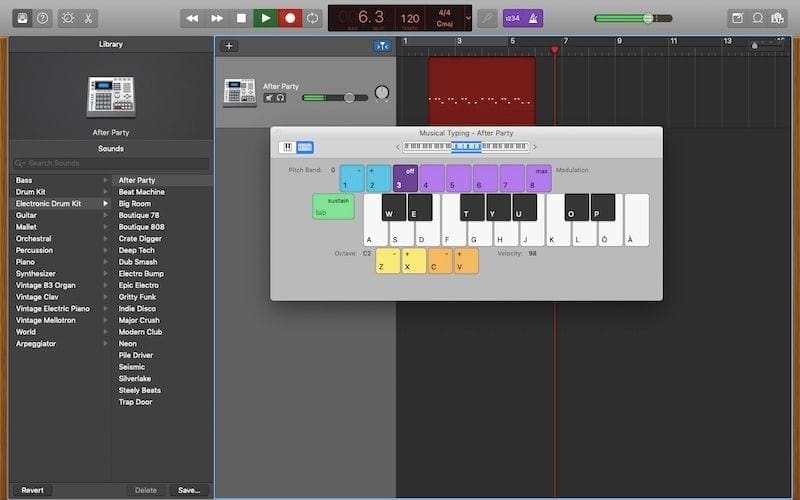

If you start to outgrow GarageBand, you can always move on to more powerful software later on.

Start Making Beats Quickly With Templates. When you start a new project in GarageBand, the software gives you a few options to get started. You can create an empty project, but there are faster ways to get started using GarageBand's included templates.

In GarageBand, choose Share > AirDrop. In the AirDrop dialog, do the following: Select Project if you want to share a project that recipients can open and edit in GarageBand on another Mac. All audio-related settings are unavailable. Select Song if you want to share an audio file mixdown that recipients can open and play in the Music app (or.

1) Choose an Apple Loop from Garageband’s massive loop library.

2) Load up the drummer track of your choosing, and switch the drum-kit to a hip-hop drum kit, such as the Trap Kit.

3) Load a sample of your choice into the workspace to fill out the track (optional).

As I mentioned above, making a song in Garageband without using instruments.

Steps for importing an audio file into GarageBand. First, make sure the file you want to import is in an accessible location (see above). Next, open GarageBand. Create a New Document. Locate the Audio Recorder (microphone) on the instrument browser and tap on the Voice option. Tap the Tracks View button.

Changing the key signature and pitch in Garageband is pretty straightforward.

1) Open your GarageBand file.

2) At the top-center of the DAW, you should see four icons in order from left-to-right: the beat, the tempo, the time signature, and the key signature.

3) By default, GarageBand uses C Major, one of the most common key signatures in music, shown in the DAW (digital audio workstation) as “Cmaj.”

4) Click on the Key Signature – “Cmaj.”

5) Change it to the desired Key.

6) The moment you switch the key signature and play the track, you’ll notice it sounds higher or lower. It’s that simple.

By the way, I have a list of all the best products for music production on my recommended products page, including the best deals, coupon codes, and bundles, so you don’t miss out (you’d be surprised what kind of deals are always going on).

But the main problem with this simple method is that you’ll change the key signature for other MIDI instruments in the song, and you may not want this.

For instance, if your drum tracks were created using a MIDI keyboard like the Arturia KeyLab 61-key from Amazon, which has the most value for the price, changing the key signature will likely throw the drums completely off, changing kicks to snares, hi-hats to cymbals, and snares to shakers.

Although, in some cases, it leaves your drum-kits alone, depending on what kit you’re using.

If you’re interested in changing the key signature for just one part of the song, check out the section below.

By the way, if you’re new to GarageBand, it doesn’t hurt to turn on the “Quick Help Button,” so that you can hover your cursor over anything in GarageBand and the software gives you a quick run-down of it. It’s in the top-left-hand corner. For the vast majority of my “How To Guides,” I’ll be referencing the name of things as titled by GarageBand.

How To Change The Key Of One Track Without Altering The Others

1) Click on the music in your track.

2) Copy the region by right-clicking it (the two-finger click on your Mac) and choosing “Copy,” or by pressing Command + C.

3) Once you’ve copied your file, save it, and then open a new project.

4) Click on “Software Instrument.”

5) Now, set your “Software Instrument” to what you were using before.

6) Copy and paste your music into the track region.

7) Click the Key Signature, and then transpose the track into a new key.

8) Copy your new music.

9) Now close this file and open up your other file from which you copied your track.

10) Open a new “Software Instrument,” and paste your transposed music into it.

11) And voila, you’ve successfully changed the key of one track in your DAW.

If you want to change the pitch of just ONE “Audio” track, whether it’s a guitar or microphone recording, without altering the key signature of your other tracks, you have to do it another way: through a plug-in.

Important Things To Note

By modifying the key signature of your music in GarageBand, the pitch of the music will change either up or down, in accordance with a particular key signature. There’s a difference between changing just the pitch and the key signature.

Assuming you’re new to musical concepts, pitch refers to how low or high the note sounds. A high note means the sound wave is vibrating very fast, and a low note means that it’s vibrating very slowly. This is why when fast-forwarding a tape, the pitch of the sound is higher rather than lower.
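To put some numbers on the tape example, here is a rough sketch in Python. It assumes standard equal temperament (12 semi-tones per octave, each octave doubling the frequency), and the function names are just ones I made up for illustration.

```python
import math


def speed_to_semitones(speed_ratio: float) -> float:
    """Pitch change, in semi-tones, when audio plays at speed_ratio times normal speed."""
    return 12 * math.log2(speed_ratio)


def semitones_to_frequency(base_hz: float, semitones: float) -> float:
    """Frequency reached by moving `semitones` away from base_hz in equal temperament."""
    return base_hz * 2 ** (semitones / 12)


# A tape played at double speed sounds one octave (12 semi-tones) higher.
print(round(speed_to_semitones(2.0), 2))            # 12.0
# One octave above A4 (440 Hz, a common tuning reference).
print(round(semitones_to_frequency(440.0, 12), 1))  # 880.0
```

In other words, faster playback means faster vibrations, and that is what your ear hears as a higher pitch.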

Altering the key signature of a song transposes the music into a different key, so the music will sound the same, just at a higher or lower pitch, depending on to what key you’ve migrated. But if you switch music from a major key into a minor key, the tonality will shift from a happy-sounding to a sad-sounding piece of music.

Transposing music means to change the overall position of the music, thus, changing its sound.

Adjusting the pitch, on the other hand, is a slightly different beast. Changing the pitch of every note in a musical passage by one semi-tone, for example, will likely create dissonance.

Dissonance is a fancy word for, “it just won’t sound quite right.”

This is the case because the distance between notes changes depending on the key signature. For example, C to D is different by a full-tone, whereas E to F is just one semi-tone.

Looking at the keyboard above, going from C to C# is one semi-tone, (a half-step), whereas going from a C to a D is a whole-tone (full-step). This is just one thing that I learned from taking the piano course, PianoForAll, from their website a few years ago.
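If it helps to see the half-step and whole-step idea counted out, here is a small Python sketch. It spells every black key as a sharp for simplicity, which is an assumption on my part (the same keys can also be written as flats).

```python
# The twelve keys in one octave, black keys spelled as sharps.
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def semitones_up(start: str, end: str) -> int:
    """Number of semi-tones from `start` up to the next `end` on the keyboard."""
    return (NOTE_NAMES.index(end) - NOTE_NAMES.index(start)) % 12


print(semitones_up("C", "C#"))  # 1 (half-step)
print(semitones_up("C", "D"))   # 2 (whole-step)
print(semitones_up("E", "F"))   # 1 (half-step, there is no black key between them)
```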

Thankfully, GarageBand comes with the ability to change the key of a specific passage when adjusting music from a MIDI keyboard or your laptop’s keyboard, rather than just adjusting the pitch.

How to Change The Key Signature (Pitch) Of An Audio Track

1) Double-click on your “Track Header,” and bring up where it shows your plug-ins down below in the “Smart Controls” settings.

2) Now go into your Plug-In options and choose the one that says, “Pitch.”

3) Choose “Pitch Shifter.”

4) Now you can select by how many semi-tones your “Audio” recording is raised or lowered.

5) Make sure you turn the “Mix” option up to 100%, so that it minimizes the original notes playing and accentuates the pitch-shifted version.

Like I talked about in my guide on pitching vocals, the big problem with this, I find, is that it doesn’t sound nearly as good. It’s an imperfect transposition, because it changes every note by exactly a semi-tone or a full tone, rather than changing the notes so it fits in a particular key signature.

In other words, some of the notes will sound dissonant.
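Here is one way to picture that in Python. This is only a sketch: it uses MIDI note numbers (60 is middle C) to stand in for the notes of an audio recording, and it assumes the rest of the song stays in C Major. The melody is a made-up example.

```python
# Pitch classes (note number modulo 12) that belong to C Major.
C_MAJOR_PITCH_CLASSES = {0, 2, 4, 5, 7, 9, 11}


def shift(notes, semitones):
    """Move every note by the same number of semi-tones, like a pitch shifter does."""
    return [note + semitones for note in notes]


def out_of_key(notes, key_pitch_classes):
    """Notes whose pitch class is not part of the given key."""
    return [note for note in notes if note % 12 not in key_pitch_classes]


melody = [60, 62, 64, 65, 67]  # C, D, E, F, G
shifted = shift(melody, 1)

print(shifted)                                     # [61, 63, 65, 66, 68]
print(out_of_key(shifted, C_MAJOR_PITCH_CLASSES))  # [61, 63, 66, 68]
```

Four of the five shifted notes land outside C Major, which is why the shifted recording can clash with tracks that were left alone.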

Another thing you can do is change the pitch using the “Pitch Correction function.”

How To Change The Pitch Using Pitch Correction

1) You do that by sliding the “Pitch Correction” bar to either the right or the left, depending on whether you want it to increase or decrease, although, there are a few other useful parameters of pitch correction that I’ve explored before.

While Garageband’s default pitch-correction is great, don’t get me wrong, a whole new world is opened up once you’ve tried Antares Auto-Tune Pro from Plugin Boutique, which is a way better tool in every way.

Another Way For Changing the Key (Pitch) Of Vocals.

1) Double click on your “Audio” vocals in GarageBand.

2) Open up a new “Track Header,” that says, “New Track With Duplicate Settings.”

3) Now go into where it says, “Voice,” on the left-hand side.

4) Click on, “Compressed Vocal.”

5) Now go into your “Smart Controls,” and select the option in the top of the bottom-right-hand corner that says, “Pedals Button,” when you hover over it with your “Quick Help Button” turned on.

It’s kind of hard to see, but I have the “Pedals Button,” circled with a black circle. It’s on the top-right-hand corner.

6) This will bring up a whole bunch of Analog-style pedals that people use when doing Analog rather than digital recordings.

7) Click on the Drop-Down Menu where it says, “Manual.”

8) Go down to “Pitch,” and change the pitch of the song to what you want. For the sake of an example, we’ll choose “Octave Up.”

9) That will make your vocals a lot higher, or you can choose one from the other 14 options listed.

That’s how you change the key signature or the pitch of your recordings in GarageBand. For the sake of clarification, I wrote a brief explanation of what key signatures exactly are, and how to go about using them.

It’s just an introduction, but it should help if you’re confused.

What Are Key Signatures And How Do I Use Them?

A key signature is a collection of all of the accidentals (sharps and flats) within a scale.

For example, a scale has seven notes. We’ll use the C Major Scale to illustrate the point.

The C Major Scale has 7 notes, beginning from C: C, D, E, F, G, A, B, and then C again.

From left to right, the scale is pictured below:

Assuming you’re a total beginner, the notes on top are the official musical notation, whereas, the numbers below are guitar tablature. There are serious advantages to learning how to read music as well as tablature if you’re a guitar player.

(I can’t lie to you, however, I’m a lot better at reading tablature, as I’m sure a lot of other people are).

Beside the treble clef, you’ll notice there aren’t any sharps (#) or flats (b). In the Key of C Major, there are none, but, if we were to transpose the key up to E Major, it would change.

In the Key Of E Major, there are four sharps: F#, C#, G#, and D#.

Reading the scale from left to right, you can see the four sharps: F#, G#, C#, and D#. The scale below is an E Major scale written in the corresponding tablature for the guitar.

Technically, this piece of music is written so that it’s still in the Key Of C because there aren’t any sharps and flats written beside the Treble Clef, which is the thing that looks like a sophisticated G on the left side of the 4/4 symbol (time signature).

Now, I’m going to write the music so it’s in the Key Of E Major. Beside the Treble Clef in the image below, I’ve put a circle around the four sharps, which indicate this piece is in the Key Of E.

Whenever you see 4 sharps before a piece of music, you know it’s in the Key Of E.

Also, you can see that you don’t have to write the sharps beside the music notes anymore. There’s no need for that because the music reader knows we’re in the Key Of E, therefore, anytime you play an F, C, G, or D, those notes have to be played one semi-tone higher.
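If you want to check the sharps for yourself, here is a short Python sketch that builds a major scale from the usual whole-step and half-step pattern. It spells every black key as a sharp, so treat it as an illustration for sharp keys like E Major rather than a complete spelling tool.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Major scale pattern in semi-tones: whole, whole, half, whole, whole, whole, half.
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]


def major_scale(root: str) -> list:
    """The seven notes of the major scale starting on `root`."""
    index = NOTE_NAMES.index(root)
    scale = [NOTE_NAMES[index]]
    for step in MAJOR_STEPS[:-1]:  # the last step only leads back to the root
        index = (index + step) % 12
        scale.append(NOTE_NAMES[index])
    return scale


def sharps_in(scale: list) -> list:
    """The notes in the scale that carry a sharp."""
    return [note for note in scale if note.endswith("#")]


print(major_scale("C"))             # ['C', 'D', 'E', 'F', 'G', 'A', 'B']
print(major_scale("E"))             # ['E', 'F#', 'G#', 'A', 'B', 'C#', 'D#']
print(sharps_in(major_scale("E")))  # ['F#', 'G#', 'C#', 'D#']
```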

There’s nothing overly sophisticated about a Key Signature. Essentially, it’s just a way of communicating the range in which the pitch of the song lies.

In other words, you know how high the song is on your guitar fretboard, or you know how high it is up on the piano keyboard.

What Is The Purpose Of A Key Signature

The purpose of a key signature isn’t to confuse up-and-coming musicians, although, I know it can feel that way.

The purpose of the key signature is, essentially, to minimize the number of sharps (#) and flats (b) written in a piece of music. If there were no key signatures, a composer would have to write a sharp and flat on many of the notes, which would be pretty time-consuming.

Conclusion

This is just a very brief introduction to key signatures in music theory. If you want to learn more basic theory, I recommend heading over to musictheory.net, as well as picking up a copy of Mark Sarnecki’s book, The Complete Elementary Rudiments, which you can read about in my post of all my most recommended products.

Mixing beats in Garageband is simple.

There are just a few things that need to be done to make a beat sound great: setting up the automation, adding dynamics processors, setting the VU meters to the appropriate volume, panning, adding effects, and then putting it through the mastering phase, which includes an additional compressor, channel EQ, and limiter on the master channel.

In terms of numbers, I would say that it took me around 80 beats until I finally figured out how to get everything to sound at least as good as the competition.

In this tutorial, I’m going to show you how to mix a beat in Garageband, using a few different tactics. First things first, I’m going to offer a summarized step-by-step check-list that gives you an idea of what to do.

From there, we’ll explore each part of the process in detail.

To mix a beat in Garageband:

1) Drop all VU meters to -15dB

2) Adjust each VU meter so it sounds good without clipping or passing +0dB

3) Use Compressor, EQ, and effects presets

4) Add Automation and Panning

5) Export the Track as a .aif file.

6) Drag it into a new project for mastering

1) Drop the VU meters of each individual track down to -15dB

Other than using a much better 808 plugin like Initial Audio’s 808 Synth from Plugin Boutique, this is one of the most important things that I wish I’d known about earlier, because it would’ve saved me a lot of time and energy. The sub-title is self-explanatory.

What you have to do is drag the volume slider of each software instrument track down to about -15dB.

The idea behind this is that you’re setting up the music so you have plenty of room to decide where each instrument fits into the mix, without clipping or distortion.

Another way this will help you is in the mastering phase.

When I first started mixing beats in Garageband, I would have parts of the track exceeding +0.0dB and I’d wonder why it sounded bad. You always want to make sure that your tracks aren’t exceeding this level, because +0.0dB is the point of distortion.

It’s the digital ceiling of the DAW; in other words, it’s the level you shouldn’t pass.
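For anyone curious about the math behind those dB numbers, here is a rough Python sketch. It assumes samples are stored as floating-point values where 1.0 is full scale (0dB), which is a common convention but not something GarageBand shows you directly. The function names are my own.

```python
import math


def db_to_gain(db: float) -> float:
    """Convert a level in dB to a linear amplitude multiplier."""
    return 10 ** (db / 20)


def gain_to_db(gain: float) -> float:
    """Convert a linear amplitude multiplier to dB."""
    return 20 * math.log10(gain)


def peak_db(samples) -> float:
    """Level of the loudest sample in dB relative to full scale."""
    peak = max(abs(sample) for sample in samples)
    return gain_to_db(peak) if peak > 0 else float("-inf")


def clips(samples) -> bool:
    """True if any sample goes past full scale (the 0dB ceiling)."""
    return any(abs(sample) > 1.0 for sample in samples)


# Pulling a fader down to -15dB scales the signal to roughly 18% of its amplitude.
print(round(db_to_gain(-15), 4))    # 0.1778
print(clips([0.4, -0.9, 1.2]))      # True
print(round(peak_db([0.5, -0.25]), 1))
```

Starting every track at around -15dB leaves a lot of distance between the loudest sample and that 1.0 ceiling.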

If you don’t adjust your VU meters in this way, and you don’t keep them as low as possible, you’ll find that when you re-insert the track back into the workspace, some of the tracks will cause clipping, particularly at the loudest part.

Some of the biggest offending instruments are typically parts of the drum-kit, for instance, the kick, snare, clap, or the cymbals, like the ride, crash, or hi-hat.

It’s important to pay attention to how your automation is adjusted as well because if you increase the sound of the music at certain points of the track, you’ll find that some of these offending instruments will exceed the acceptable threshold.

2) Drag the VU meters of each track to the desired level, without causing clipping, and definitely without exceeding +0.0dB.

At this stage, it’s really up to the producer to decide where each VU meter needs to be set at in dB. I can’t do it for you. Just adjust the volume of each track so it sounds good to your ears.

For the most part, I just want each instrument to sound good with the other, without overpowering anything.

The loudest instruments, obviously, should be adjusted so they’re at the lowest end of the VU meter, for example, -15dB, while the others can go up to around -5dB if need be.

Remember, you want each instrument to sound clear and distinguished, without being overbearing or annoying.

Also, the VU meters should never be going into the red on any part of the track. During the loudest part, they can hover in the yellow.

3) Set up your dynamics processors on each track, including the Compressor, Channel EQ, and the Noise Gate

For this part, the three most important plug-ins you can set up on the instrument tracks are the Compressor, Channel EQ, the Noise Gate, and perhaps, a Limiter.

Obviously, there are other processors you could add to the instrument tracks, however, these are the necessary ones that make all the difference in terms of how your music will sound in the final product.

How you set-up these plug-ins really depends on the instrument itself, as well as the purpose of it. For instance, if you’ve recorded some guitar parts for the beat, it would definitely be a wise decision to add a compressor on to it, depending on whether or not it’s distorted.

As a general rule, you want the order of the plug-ins to be Noise-Gate > Compressor > EQ > Effect > Limiter.

Compressor

I typically use Garageband’s pre-sets as a start-off point in terms of how I adjust the parameters of the plug-in. For example, if you’re using a compressor for an acoustic guitar, the Acoustic Guitar pre-set is going to make a world of difference.

It’s not uncommon for me to increase the gain, raise the ratio just a little bit, and also the threshold. These are the three compressor settings I change the most, with the gain being the one I touch most often.

As I explained in my article on compression, increasing the gain of the compressor makes the output signal louder. The ratio sets the strength of the compression, and the threshold sets the level above which the plug-in starts compressing the signal.
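To make the three settings a bit more concrete, here is a simplified Python sketch of what a compressor does to a signal level. It only covers the basic threshold, ratio, and make-up gain relationship and ignores attack, release, and knee, so it is not how GarageBand’s Compressor is actually implemented.

```python
def compress_db(level_db: float, threshold_db: float, ratio: float,
                makeup_db: float = 0.0) -> float:
    """Output level in dB for a given input level (static curve only)."""
    if level_db <= threshold_db:
        # Below the threshold the signal is left alone, apart from make-up gain.
        return level_db + makeup_db
    # Above the threshold, every `ratio` dB of input yields 1 dB of output.
    return threshold_db + (level_db - threshold_db) / ratio + makeup_db


print(compress_db(-6.0, threshold_db=-18.0, ratio=4.0))                 # -15.0
print(compress_db(-6.0, threshold_db=-18.0, ratio=4.0, makeup_db=3.0))  # -12.0
print(compress_db(-30.0, threshold_db=-18.0, ratio=4.0))                # -30.0
```

A peak that was 12dB over the threshold ends up only 3dB over it at a 4:1 ratio, and the gain then lifts the whole signal back up.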

Another great trick for the use of the compressor is parallel compression (my guide as well).

In case you don’t want to click the link, parallel compression, in this context, just means you’re copying the desired instrument into a new software instrument track, with one copy having compression on it and the other going without it.

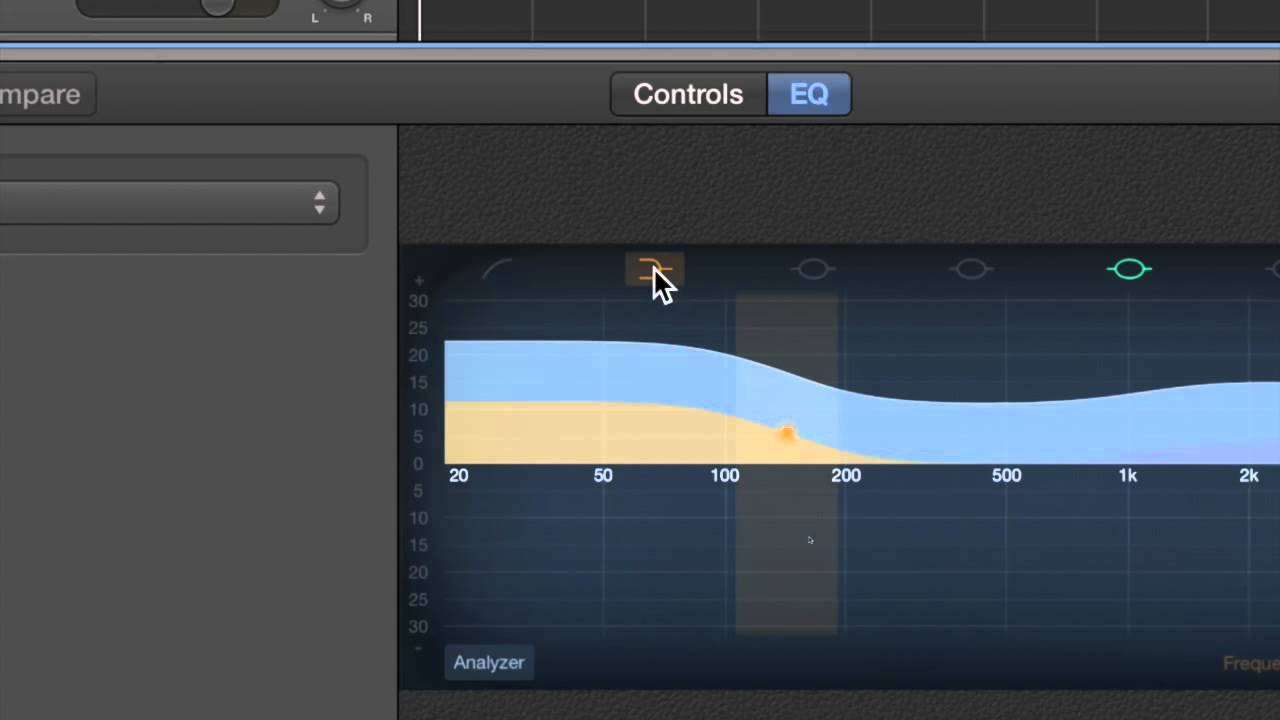

Channel EQ

The same principle for the compressor applies to the Channel EQ plug-in as well. What instrument you’re making changes to is ultimately going to be the primary factor of how you adjust the EQ, and what frequencies you’re increasing or attenuating.

When it comes to EQ, however, I find that attenuating (decreasing) the mid-range frequencies, especially at 200Hz, is almost always a must, due to the fact most instruments have at least part of their frequency range in this area.

Moreover, you may need to adjust the sub-bass EQ and high-range EQ. Like I just mentioned, what instrument you’re changing, for the most part, is going to determine how you go about this.

When it comes to the guitar, I almost always attenuate some of the mid-frequencies by a little bit, set up a high-pass so the lower frequencies are eliminated, and then I boost the high frequencies from 1,000Hz to 10,000Hz, with the sparkle increased at 10,000Hz (my guide on EQing guitars).

Use the pre-sets as your guiding light in the darkness. The pre-sets are meant to give you an idea of how parameters are adjusted for particular functions, and they’re honestly quite good.

For instance, the vocal pre-sets often go along with the industry standards of what some of the more authoritative sources will tell you to do.

Take the vocals, for example. You’ll want to scoop the lower frequencies and attenuate the mid-range by a little bit, but boost the highs. This is for a male vocalist with a lower voice.

The low frequencies of a woman’s voice, on the other hand, don’t need to be attenuated so much due to the higher pitch of the female singing voice. The fundamental difference in EQ between the male and female singer is the attenuation of lower frequencies.

For the male, you scoop them out like I talked about in my other tutorial.

For the woman, you leave them as they are, or attenuate them even less, depending on how high her voice is.

Some additional tips for the EQ are to eliminate some of the “sss” sounds of the instrument tracks, for instance, a common culprit is the higher frequencies of the hi-hat or another cymbal.

Commonly, I’ll decrease some of the higher range frequencies of the instruments that exist primarily in the aforementioned ranges, between 5,000Hz and 10,000Hz.

Like I said above, use the Channel EQ pre-sets to guide you in your decision. One of the great things about Garageband and Logic Pro X is the pre-sets. They have a pre-set for nearly every single instrument. They aren’t to be followed 100%, but they’re an excellent place to start.

Noise Gate

The Noise Gate is a lot simpler for a number of reasons, mostly due to the lack of sophistication, although, it depends on what type of noise gate you’re actually using.

If you’re using the Bob Perry Noise Gate plug-in, there are more sophisticated parameters. Assuming you’re using the regular noise-gate plug-in, however, you can only adjust by dB and that’s it.

What I commonly do is adjust the Noise Gate so it eliminates as much extraneous noise as possible, without decreasing the amount of sustain of the instrument, and without making it sound choppy. Experiment with what you think sounds best.

I find the range between -65dB and -40dB to be the most appropriate.
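As a rough idea of what that dB setting does, here is a bare-bones Python sketch of a gate. It simply silences any sample quieter than the threshold and assumes samples where 1.0 is full scale. A real noise gate follows the signal’s envelope with attack and release times, so this is a simplification, not GarageBand’s plug-in.

```python
def gate(samples, threshold_db: float):
    """Silence every sample whose level falls below threshold_db."""
    threshold = 10 ** (threshold_db / 20)  # dB to linear amplitude
    return [sample if abs(sample) >= threshold else 0.0 for sample in samples]


# At -40dB the threshold is 1% of full scale, so the faint hiss is removed.
print(gate([0.5, 0.005, -0.02, 0.0001], threshold_db=-40))  # [0.5, 0.0, -0.02, 0.0]
```

Set the threshold too high and the quiet tail of a note gets cut off too, which is the choppy sound I mentioned.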

4) Add the desired and necessary effects, such as distortion, delay, reverb, ambiance, flangers, phasers, etc.

I would say that the most commonly applied effects plug-ins, at least in my world, are the reverb, ambiance, distortion, and the delay. In some cases, I may find it necessary to throw in a flanger or a phaser in there.

The reverb and ambiance plug-ins are going to make the music actually sound good, for the most part. They make all the sounds and instruments mesh together. They add a “juicy” quality to the music, that actually gives it that feeling.

The distortion is another effect that I use on many different instruments and for different reasons. For instance, I may add just a tiny bit of drive/distortion to the kick, snare, the boutique 808, or even a bit more crunch on the guitar, depending on what kind of guitar it is.

For the most part, however, I add distortion to the aforementioned instruments the most, the kick, snare, and the boutique 808.

For this, I would recommend the FuzzPlus3 plug-in, which is great for adding a subtle amount of drive to whatever instrument calls for it. It’s great for the boutique 808, among other instruments, because it has specific parameters that allow for solid customization.

Delay is another effect I commonly use. I almost always use delay, for instance, on the vocal track as well as the piano and guitars.

The delay, when used sparingly, can “thicken up” the sound, so to speak, bringing it forward in the mix, and giving it more of a professional sound.

The delay is also instrumental in the Haas trick which I’ve talked about before. Delay can be used as more than just a thickening agent. It can also be used as a straight-up effect.
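For a feel of what a delay does under the hood, here is a minimal Python sketch of a single echo: the signal is mixed with a quieter, later copy of itself. The 44,100 samples-per-second rate and the 20 millisecond figure are assumptions for the example, and real delay plug-ins add feedback and filtering on top of this.

```python
def ms_to_samples(delay_ms: float, sample_rate: int = 44100) -> int:
    """Convert a delay time in milliseconds to a whole number of samples."""
    return round(delay_ms * sample_rate / 1000)


def add_delay(samples, delay_samples: int, mix: float = 0.3):
    """Mix each sample with a copy of the signal from `delay_samples` earlier."""
    if delay_samples < 0:
        raise ValueError("delay_samples must not be negative")
    output = []
    for i, sample in enumerate(samples):
        delayed = samples[i - delay_samples] if i >= delay_samples else 0.0
        output.append(sample + mix * delayed)
    return output


print(ms_to_samples(20))                               # 882
print(add_delay([1.0, 0.0, 0.0, 0.0], 2, mix=0.5))     # [1.0, 0.0, 0.5, 0.0]
```

Keep an eye on the level afterwards, because adding the delayed copy can push peaks higher than the original.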

5) Add Automation to the tracks that need it, whether it’s just volume automation or Channel EQ automation

Automation, a topic I’ve explored at length, is great for adjusting the dynamic range of a track.

Through the use of volume automation, you can increase or decrease the intensity of particular parts of the track, depending on what your goals are with the music.

For instance, you may want to slightly increase the master channel volume by 1-2dB right before a chorus to build intensity. Conversely, you could do the exact opposite as well, say for example, if you wanted a particular instrument to be a bit softer in a specific section of the song.

Bringing up the automation function in Garageband is as simple as just hitting ‘A’ on your keyboard.

Another important point to mention is that the automation controls will act responsively to the number of plug-ins you have set-up in the Smart Controls.

In other words, if you have 7-8 different plug-ins running on an individual track, you’ll notice that you now have the option to automate those plug-ins through the automation function.

You can see what I’m talking about in the image below:

With all that said, I also think it’s worth mentioning that automation can be time-consuming, especially if you’re not careful.

For instance, you have to pay careful attention to by how much you’re increasing or decreasing the volume because you don’t want to cause clipping or distortion.

In some cases, you may not even notice that you’ve increased the volume up to the point of distortion until after you’ve inserted the track into a new project file for DAW-mastering purposes.

If you do find yourself in this situation, you’ll have to go back to the original mix and re-adjust all of the parameters and settings you made. Tread carefully with automation and be mindful of what you’re doing.
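One way to sanity-check a volume ride before you commit to it is to do the arithmetic first. Here is a small Python sketch, assuming a straight-line ramp between two automation points and a 0dB ceiling. The peak values are made-up numbers you would read off your own meters.

```python
def automation_ramp(start_db: float, end_db: float, points: int):
    """Evenly spaced volume values (in dB) from start_db to end_db."""
    if points < 2:
        raise ValueError("a ramp needs at least two points")
    step = (end_db - start_db) / (points - 1)
    return [start_db + i * step for i in range(points)]


def stays_below_ceiling(peak_db: float, boost_db: float,
                        ceiling_db: float = 0.0) -> bool:
    """True if a track peaking at peak_db is still at or under the ceiling after the boost."""
    return peak_db + boost_db <= ceiling_db


ramp = automation_ramp(0.0, 2.0, 5)
print(ramp)                                                   # [0.0, 0.5, 1.0, 1.5, 2.0]
print(stays_below_ceiling(peak_db=-3.0, boost_db=max(ramp)))  # True
print(stays_below_ceiling(peak_db=-1.0, boost_db=max(ramp)))  # False
```

A track that already peaks at -1dB has no room for a 2dB ride, so it would need to come down first.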

6) Pan each instrument track to where it needs to be.

While I would like to say that panning is one of the most important parts of mixing (in fact, I did say it in my panning guide), you can’t really say that because everything works together cohesively, and you need one aspect of the process to work together with the other.

When one thing is missing, it’s not going to sound right, regardless of what it is.

Panning is just the process of directing the sound of the instrument to the appropriate place. In other words, you’re adjusting the sound’s location in the mix.

You can see what my panning technique looks like below:

For the sake of convenience, I’ll briefly offer a guideline in this article to save you time.

Another important thing to mention is that, in the following guide, you want to make sure that two instruments aren’t in exactly the same position. For example, if you want the clap and the hi-hat to the left, you want the clap to be slightly more left than the hi-hat or vice versa.

Moreover, a good practice is creating room in the mix for the rapper. So, when panning the melodic instruments of the song, have them panned to the right and left a little bit, that way there is room for the artist to sing or rap.

Kick – In the middle or near-middle

Snare – Slightly to the right or the left

Hi-Hats – Panned to either left or right

Cymbals – Panned to the left or right, but not in the same position as the hi-hat.

Claps – Also panned to the left or right.

Boutique 808s or Bass – Panned to Center

Melodic Instruments – Panned slightly to the left, and slightly to the right.
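If you like to plan your panning on paper first, here is a rough Python sketch. It uses a constant-power pan law, which is one common approach and not necessarily the one GarageBand uses, with pan values from -1.0 (hard left) to 1.0 (hard right). The instrument positions are hypothetical numbers for the example.

```python
import math
from collections import defaultdict


def pan_gains(pan: float):
    """Left and right gain for a pan position between -1.0 and 1.0 (constant power)."""
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    angle = (pan + 1.0) * math.pi / 4  # 0 is hard left, pi/2 is hard right
    return math.cos(angle), math.sin(angle)


def shared_positions(pans: dict):
    """Groups of instruments that sit at exactly the same pan position."""
    by_position = defaultdict(list)
    for name, pan in pans.items():
        by_position[pan].append(name)
    return [names for names in by_position.values() if len(names) > 1]


left, right = pan_gains(0.0)
print(round(left, 3), round(right, 3))  # 0.707 0.707

# Hypothetical pan settings for a beat.
pans = {"kick": 0.0, "snare": 0.1, "hi-hat": -0.4, "clap": -0.4, "cymbals": 0.5}
print(shared_positions(pans))           # [['hi-hat', 'clap']]
```

Here the hi-hat and clap would need to be nudged apart, as mentioned in the guideline above.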

7) Export the Track as a .aif file, and then drag and drop it into a new project file.

At this stage, you’ve done everything.

You’ve set up the effects, dynamics processors; you’ve panned the instruments to the right place in the mix, you’ve made sure all of the VU meters are where they should be; you’ve ensured nothing is clipping, and you’ve also made sure that no plug-ins are running on the master channel.

What you do now is export the track as a .aif file, send it to your desktop, and then open a new project file and re-insert the track into a new project (use my guide for reference if you’re having difficulty).

8) Add a Channel EQ, a Compressor, and a Limiter to the Master Channel of the track, and increase the Master Volume to +2.5dB.

What you can do now is add the finishing touches to the track, including the Channel EQ, Compressor, Limiter, as well as the Master Volume (Fab Filter has the best mastering plugins on Plugin Boutique).

I would add the plug-ins in the following order, Noise Gate > Compressor > Channel EQ > Limiter, from top to bottom.

For the EQ, you just want to eliminate any undesirable sounds in the mix or increase the number of highs in it. For instance, you may find that the sub-bass frequencies need attenuation just by a little bit, in addition to a bit of the mid-range.

Moreover, you could drop the high-frequencies in the 10,000Hz range by just a little bit to eliminate the “ess” sound that some of the other instruments might be causing.

For the channel EQ, I might use settings like what’s shown in the image below:

For the compressor, I would say that the Platinum Analog Tape is arguably one of the best to use. It’s a personal favorite of mine.

For the Limiter, you could just increase the gain of the track by +2.0dB and then adjust the output level to -0.1dB. This is going to set a ceiling for the song and also increase the gain of it by a little bit.
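To show how those two Limiter numbers work together, here is a very simplified Python sketch. It boosts the signal by the gain and then hard-clamps anything above the output level. A real limiter reduces the gain smoothly instead of chopping peaks, so this only illustrates the gain and ceiling idea, assuming samples where 1.0 is full scale.

```python
def limit(samples, gain_db: float = 2.0, ceiling_db: float = -0.1):
    """Boost by gain_db, then clamp every sample to the ceiling_db level."""
    gain = 10 ** (gain_db / 20)
    ceiling = 10 ** (ceiling_db / 20)
    return [max(-ceiling, min(ceiling, sample * gain)) for sample in samples]


limited = limit([0.1, 0.9, -0.95])
ceiling = 10 ** (-0.1 / 20)

# Quiet samples simply get louder; loud ones stop at the ceiling.
print([round(sample, 3) for sample in limited])
print(all(abs(sample) <= ceiling for sample in limited))  # True
```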

At this point, now everything is finished, and you can go ahead and increase the master channel volume by the desired amount. For this stage, try and think of a volume that you think is consistent with some of your favorite music, and then always keep this volume in mind.

For instance, I typically increase the volume by +2.0dB – +2.5dB. This is a good range that will be competitive with the other music out there, however, another great thing to do is to use a metering plugin like Reference 2 from Plugin Boutique. This will take the mystery out of the process and you’ll know exactly how loud something is without guessing.

You may find yourself wanting to increase the volume as much as possible, but it’s best to be careful. The human ear always thinks that louder is better when it comes to music.

But if you increase the volume of the track by too much, you’ll end up with a song that’s too loud to the point where streaming platforms will decrease the volume anyway.

Some people also call this the “loudness war,” because many engineers nowadays want their music as loud as possible, that way it seems like more of a banger than the other music out there.

Other Important Tactics

- Mix in Mono

Mixing in Mono is going to centralize all of the sounds into one channel. It’s useful because it will show you how your music will sound on a mono playback system, which, to many engineers, is a crucial part of the process (my guide).

Once you switch the track back to Stereo, by turning the ‘Convert to Mono’ option off in the Gain plug-in, you’ll notice how much better it sounds. As a general rule, it’s a great practice to mix in mono.
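Part of the reason checking in mono is useful is that sounds which are out of phase between the left and right channels can cancel each other out. Here is a tiny Python sketch of that, assuming mono is made by averaging the two channels, which is one common way to do it and not necessarily what the Gain plug-in does internally.

```python
def to_mono(left, right):
    """Fold a stereo pair into one channel by averaging left and right."""
    if len(left) != len(right):
        raise ValueError("left and right must be the same length")
    return [(l + r) / 2 for l, r in zip(left, right)]


left = [0.5, -0.5, 0.25]

# Identical channels survive the fold-down untouched.
print(to_mono(left, left))                # [0.5, -0.5, 0.25]
# A phase-inverted right channel cancels completely.
print(to_mono(left, [-s for s in left]))  # [0.0, 0.0, 0.0]
```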

- Mix with Low Volume

As I explained in my 24 tips to mixing tutorial, mixing in low-volume is a great practice, in my opinion, not only because you won’t do as much damage to your hearing, but also because of the way the human ear is structured and how it responds to sound.

Conclusion

I hope this was helpful to you. Do me a favor and share this on your social media. That would help me out a lot.